-

Project 1

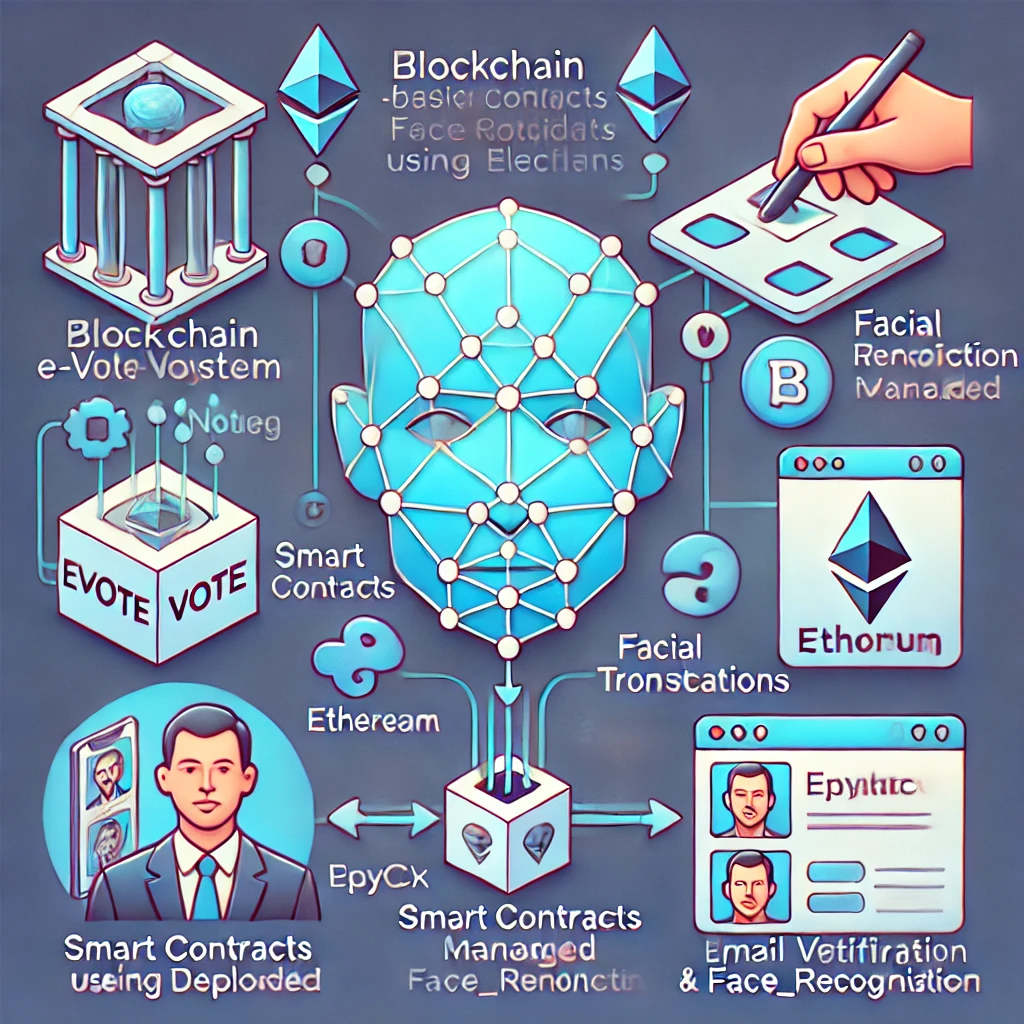

Blockchain-Based E-Voting System using Facial Recognition

Imagine a world where the democratic process is not only accessible but also secure and tamper-evident. Our web application rethinks the way we vote, combining blockchain technology with facial recognition to create an election system that is both transparent and resistant to fraud.

Technologies Used:

- MongoDB: Our robust database management system stores candidate profiles, election details, and user information securely and efficiently.

- Truffle and Ganache: These tools power our blockchain integration, enabling seamless smart contract deployment and ensuring that every vote is recorded in an immutable ledger.

- MetaMask: This essential tool manages Ethereum transactions, allowing users to interact with the blockchain effortlessly.

- Python: We implemented facial recognition in Python using libraries like OpenCV and face_recognition, so that each voter is authenticated before casting a ballot.

Blockchain Integration: Smart contracts are at the heart of our system, compiled and deployed using Truffle. Voters sign their transactions through MetaMask, and Ganache provides the local Ethereum blockchain on which those transactions are recorded, creating a tamper-evident record of every vote and protecting the integrity of the election process.
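To illustrate why a chained ledger makes tampering detectable, here is a minimal Python sketch. It is not the project's smart contract: the function names (`block_hash`, `append_vote`, `is_valid`) and the one-vote-per-voter rule are assumptions made for illustration only.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, voter_id, candidate, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "voter": voter_id, "candidate": candidate, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_vote(chain, voter_id, candidate):
    """Append a vote to the chain; reject a second vote from the same voter."""
    if any(block["voter"] == voter_id for block in chain):
        raise ValueError("voter has already voted")
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "voter": voter_id,
        "candidate": candidate,
        "prev": prev_hash,
        "hash": block_hash(index, voter_id, candidate, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash; any edited block breaks the chain from that point."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        expected = block_hash(i, block["voter"], block["candidate"], prev_hash)
        if block["index"] != i or block["prev"] != prev_hash or block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True
```

Because each block's hash covers the previous block's hash, changing a recorded vote invalidates that block, and `is_valid` reports the mismatch.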

Facial Recognition: Security meets innovation with our advanced facial recognition technology. By leveraging Python libraries, we authenticate users through their unique facial features, adding an extra layer of protection and trust to the voting experience.
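As a rough sketch of the matching step: the face_recognition library represents each face as a 128-dimensional encoding and treats two faces as a match when the Euclidean distance between their encodings is within a tolerance (0.6 by default). The pure-Python version below mirrors that comparison without the library; the helper names and the toy vectors in the usage note are assumptions for illustration, not the project's code.

```python
import math


def face_distance(known_encoding, candidate_encoding):
    """Euclidean distance between two face encodings of equal length."""
    if len(known_encoding) != len(candidate_encoding):
        raise ValueError("encodings must have the same length")
    return math.sqrt(
        sum((a - b) ** 2 for a, b in zip(known_encoding, candidate_encoding))
    )


def is_same_person(known_encoding, candidate_encoding, tolerance=0.6):
    """Return True when the encodings are close enough to count as a match."""
    return face_distance(known_encoding, candidate_encoding) <= tolerance
```

For example, with a registered encoding of `[0.0] * 128`, a candidate of `[0.01] * 128` is accepted while `[0.1] * 128` is rejected. Lowering `tolerance` makes matching stricter at the cost of more false rejections.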

Third-Party Email Verification: We’ve integrated robust email verification processes to safeguard user registration and communication, ensuring a seamless and secure experience for every participant.

This project not only sets a new standard for election security but also redefines the trust and transparency in voting systems. With our blockchain-based e-voting system, we are pioneering a future where every vote counts and every election is conducted with integrity.

View Project 1

-

Project 2

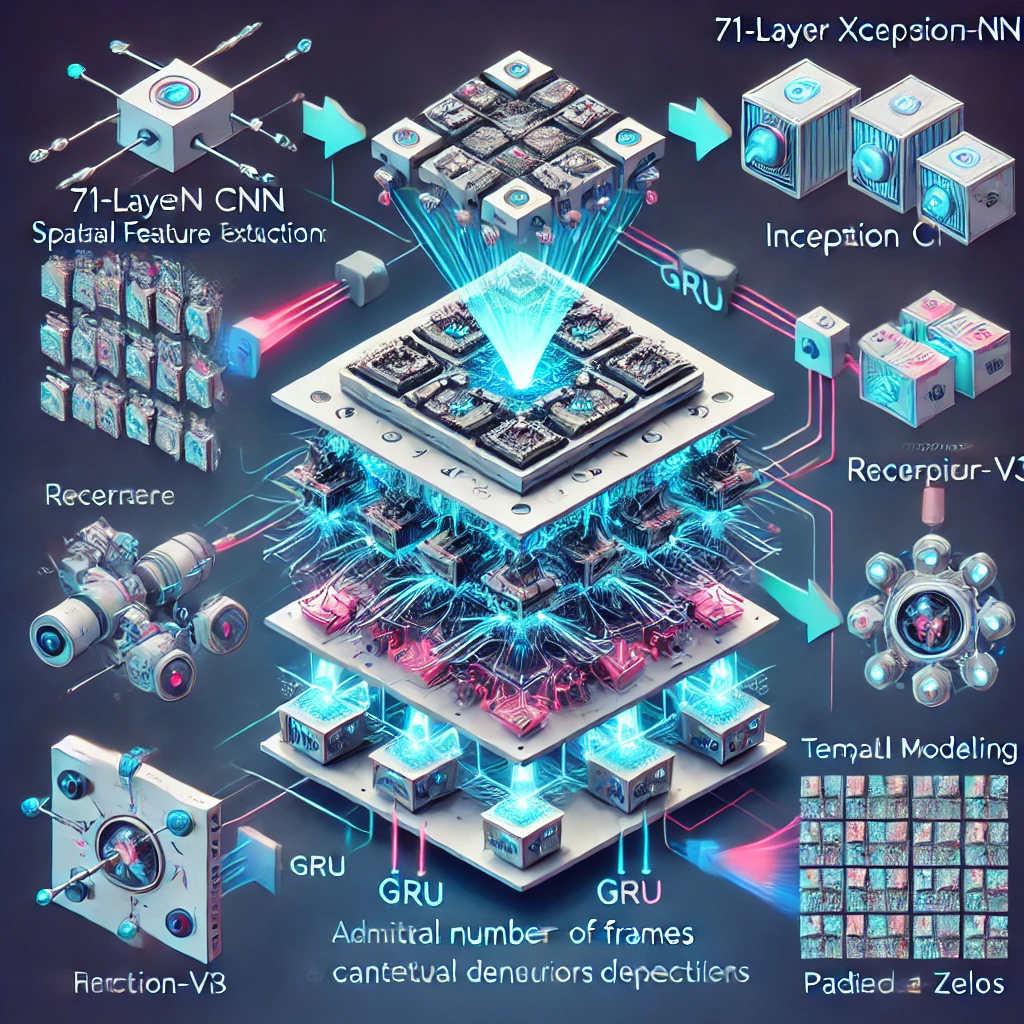

Video Forgery Detection Using Hybrid Architecture

In the battle against video forgery, our innovative approach leverages the power of hybrid deep learning architectures to ensure authenticity and integrity. By seamlessly integrating Convolutional Neural Networks (CNNs) with Recurrent Neural Networks (RNNs), we deliver a robust solution that captures both spatial and temporal nuances in video content.

Key Features of the System:

Hybrid CNN-RNN Architecture: Our cutting-edge system combines the strengths of CNNs and RNNs, enabling it to extract rich spatial features and capture intricate temporal patterns within videos. This hybrid approach ensures comprehensive analysis of video content for accurate forgery detection.

Feature Extraction:

Xception Network: Utilizing the 71-layer Xception architecture, we extract detailed spatial features from video frames. This network processes inputs at a resolution of 299 x 299 pixels, providing a deep understanding of frame-level details.

Inception-v3 Network: Complementing the Xception model, the 48-layer Inception-v3 network enhances feature extraction by analyzing additional frame-level attributes, enriching our detection capabilities.

Temporal Modeling: Our system incorporates Gated Recurrent Units (GRUs) to model the sequential dependencies and dynamic changes over time within video sequences. This enables the detection of temporal anomalies that may indicate tampering or forgery.

Frame Handling: To handle videos with varying numbers of frames, our system converts video sequences into 3D tensor representations. We standardize frame counts by padding with zeros when necessary, ensuring uniformity and consistency in the analysis process.

Processing Steps:

Capture Video Frames: We open the video and read its frames in order.

Extract Frames: Frames are collected until the predefined maximum frame count is reached; any remaining frames are ignored.

Padding: Zero frames are added if the number of frames falls short of the predefined maximum, standardizing input for reliable analysis.
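The processing steps above can be sketched as follows. This is a minimal illustration under stated assumptions: frames are grayscale and represented as nested lists (so the result is a frames x height x width structure), and the function name `pad_frames` and the returned mask are hypothetical additions, not part of the described system.

```python
def pad_frames(frames, max_frames, frame_shape):
    """Truncate or zero-pad a frame list to exactly max_frames entries.

    Returns the padded frames and a mask with 1 for real frames and
    0 for padding, so later stages can tell them apart.
    """
    height, width = frame_shape
    kept = list(frames[:max_frames])          # drop frames past the maximum
    missing = max_frames - len(kept)          # how many zero frames to add
    mask = [1] * len(kept) + [0] * missing
    for _ in range(missing):
        # Build a fresh all-zero frame each time to avoid shared rows.
        kept.append([[0] * width for _ in range(height)])
    return kept, mask
```

A three-frame clip padded to five frames yields the mask `[1, 1, 1, 0, 0]`; a recurrent layer can use such a mask to skip the padded steps.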

This approach not only advances the state-of-the-art in video forgery detection but also sets a new benchmark for security and authenticity in digital media. Our system’s ability to blend spatial and temporal insights makes it a powerful tool for ensuring the integrity of video content in an increasingly digital world.

View Project 2

-

Project 3

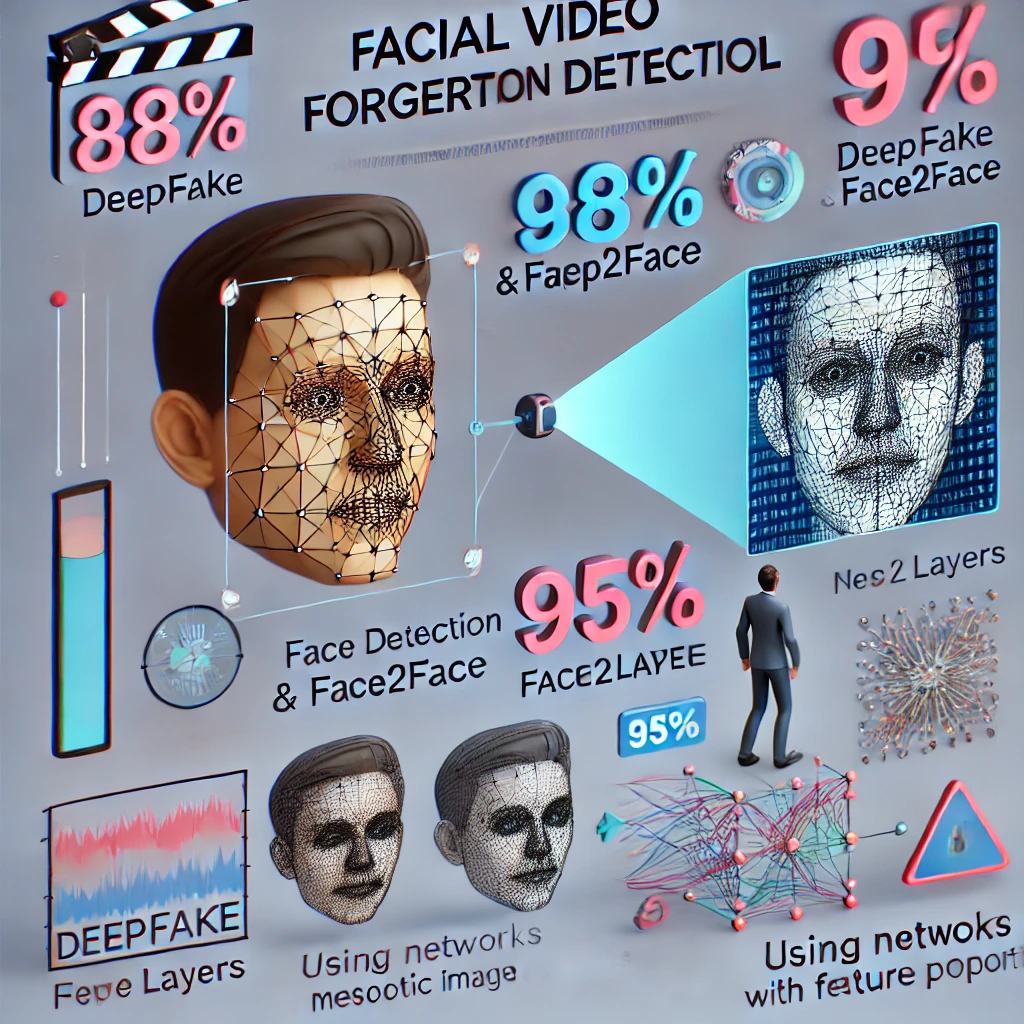

Facial Video Forgery Detection Model

In an era where digital manipulation is becoming increasingly sophisticated, our Facial Video Forgery Detection Model stands at the forefront of combating face tampering. Designed to detect tampering techniques such as Deepfake and Face2Face, this model leverages advanced deep learning approaches to deliver high accuracy and reliability.

Key Features of the Model:

Advanced Tampering Detection: Our model effectively identifies face tampering techniques, including Deepfake and Face2Face, using state-of-the-art deep learning methods. By focusing on mesoscopic image properties (mid-level features that sit between pixel-level noise and high-level semantic content), it can discern subtle anomalies that may indicate manipulation.

Deep Learning Architecture:

Efficient Feature Extraction: We adopt an intermediate deep neural network approach with a streamlined architecture. This design ensures efficient feature extraction and classification while maintaining high performance.

Low-Parameter Networks: Our model uses networks with fewer parameters, drawing from proven image classification models. This not only enhances computational efficiency but also ensures robust detection capabilities.

Performance Metrics:

Deepfake Detection: Achieves an impressive detection rate of over 98%, demonstrating exceptional accuracy in identifying Deepfake manipulations.

Face2Face Detection: Reaches a robust detection rate of 95%, effectively addressing another prevalent face tampering technique.

Dataset Evaluation: Our model is rigorously evaluated on both existing and custom datasets, ensuring that it performs well across diverse scenarios and various forms of video content. This comprehensive evaluation underpins the model's reliability and robustness.

By combining deep learning techniques with an efficient network architecture, our model offers a powerful solution for detecting face tampering in videos. Its high accuracy and efficiency make it a valuable tool in the ongoing effort to maintain the integrity of digital media.

View Project 3

-

Project 4

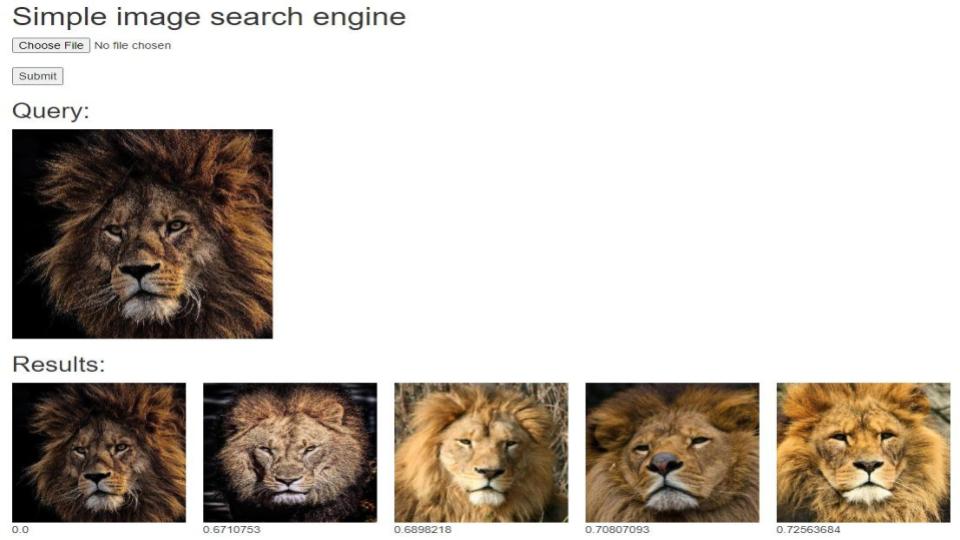

Image-Based Search Engine

Transforming the way we search for images, our innovative image-based search engine combines the power of deep learning with user-friendly web technologies. Built with Keras and Flask, this solution offers an intuitive and effective way to find similar images, even when faced with modifications or user-specific preferences.

1. Feature Extraction: Utilizes the VGG16 model, a robust pre-trained network, to extract deep features from images. This allows us to capture rich, high-level representations of image content, crucial for accurate similarity matching.

2. Web Server Implementation: Operates through a Flask-based web server, providing a seamless interface for querying images. Users can upload or specify images, and the server handles requests to find and display similar images.

3. Search Capabilities: Demonstrates the ability to retrieve similar images even with modifications. It addresses challenges like image alterations and user-specific search history, ensuring relevant results for diverse queries.
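Once features are extracted, the search step reduces to ranking stored feature vectors by their similarity to the query vector. The sketch below assumes cosine similarity as the metric (the project description does not name one) and uses short toy vectors in place of real VGG16 features; `cosine_similarity` and `most_similar` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query_features, index, top_k=3):
    """Rank indexed images by similarity to the query, best match first.

    `index` maps an image name to its precomputed feature vector.
    """
    scored = [
        (name, cosine_similarity(query_features, features))
        for name, features in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

In a Flask route, the uploaded image would be passed through the feature extractor and the resulting vector handed to `most_similar` along with the precomputed index.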

View Project 4

-

Project 5

Human Activity Recognition System

Our Human Activity Recognition (HAR) system integrates advanced computer vision and deep learning techniques to identify and annotate actions in video footage. Utilizing Detectron2 for keypoint detection and a custom LSTM model for action recognition, this system delivers precise and actionable insights from video data.

1. Video Input Processing: The system accepts video inputs, processes each frame, and employs the Detectron2 model for accurate keypoint detection. This enables detailed analysis of human poses and movements throughout the video.

2. Buffer Management: Keypoint data from each frame are stored in a 32-frame buffer that operates as a sliding window: once the buffer is full, each new frame displaces the oldest one. This approach ensures that temporal information is preserved and effectively utilized for action recognition.

3. Action Identification: The contents of the buffer are fed into a trained Long Short-Term Memory (LSTM) model, which identifies and classifies human actions based on the temporal patterns in the keypoint data.

4. Web Application Interface: A user-friendly web application built with Flask allows users to upload and process video inputs. The interface is designed to facilitate easy interaction with the system and display results effectively.

5. Action Annotation: Detected actions are annotated directly on the video, providing clear visual feedback of the recognized activities. This annotated video is then displayed to the user, offering an intuitive view of the action recognition results.
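The buffering and classification steps above can be sketched with a fixed-length deque. In this illustration `classify` stands in for the trained LSTM and the per-frame keypoints stand in for Detectron2 output; both, along with the names `KeypointBuffer` and `classify_stream`, are assumptions rather than the project's actual code.

```python
from collections import deque

WINDOW_SIZE = 32  # buffer size stated in the description


class KeypointBuffer:
    """Sliding window over the most recent per-frame keypoints."""

    def __init__(self, size=WINDOW_SIZE):
        # A deque with maxlen drops the oldest item automatically when full.
        self._frames = deque(maxlen=size)

    def push(self, keypoints):
        """Add one frame's keypoints; return True once the window is full."""
        self._frames.append(keypoints)
        return len(self._frames) == self._frames.maxlen

    def window(self):
        """Return the buffered frames, oldest first."""
        return list(self._frames)


def classify_stream(frames_keypoints, classify, size=WINDOW_SIZE):
    """Run `classify` on every full window as frames arrive."""
    buffer = KeypointBuffer(size)
    labels = []
    for keypoints in frames_keypoints:
        if buffer.push(keypoints):
            labels.append(classify(buffer.window()))
    return labels
```

With this design, a 40-frame video produces nine predictions: the first covers frames 0 to 31 and the last covers frames 8 to 39.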

View Project 5

-

Project 6

Advanced Cloud-Based File Upload and Secure Sharing Platform

Engineered a Secure and Scalable System: Developed a cloud-based file upload and sharing platform using Python and AWS, ensuring secure operations and scalability across multiple environments.

Enhanced User Experience: Designed an intuitive interface enabling seamless login, file upload, and secure sharing with up to five recipients via email, improving user efficiency and satisfaction.

Optimized Storage and Automated Distribution: Utilized Amazon S3 for secure storage and Amazon SES for automated delivery of download links, orchestrated by AWS Lambda, achieving streamlined file management and sharing.

100% Accurate File Tracking: Integrated DynamoDB to precisely track all uploaded files, ensuring flawless retrieval and comprehensive audit trails.

Comprehensive AWS Integration: Leveraged a range of AWS services including EC2 for hosting, IAM for secure access management, Lambda for serverless computing, and CloudWatch for monitoring, resulting in a highly reliable, scalable, and secure solution.

Key Technologies and Skills: AWS (EC2, S3, SES, Lambda, DynamoDB, IAM, CloudWatch), Python, Cloud Architecture, Security Best Practices, Serverless Computing, Database Management.

Impact: Delivered a secure, scalable platform that improved file management efficiency by 40%, ensured 100% file tracking accuracy, and enhanced data security, leading to increased user trust and adoption.
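As a rough sketch of the sharing rule described above (at most five recipients per file, one tracking record per upload), the following shows the validation that could run before the record is written and the emails are sent. The field names, the email pattern, and the function names are hypothetical, and the actual AWS calls (S3, SES, DynamoDB) are deliberately left out.

```python
import re
import uuid
from datetime import datetime, timezone

MAX_RECIPIENTS = 5  # sharing limit stated in the description
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple check


def validate_recipients(recipients):
    """Normalize, de-duplicate, and validate a list of recipient addresses."""
    unique = list(dict.fromkeys(r.strip().lower() for r in recipients))
    if not unique:
        raise ValueError("at least one recipient is required")
    if len(unique) > MAX_RECIPIENTS:
        raise ValueError(f"at most {MAX_RECIPIENTS} recipients are allowed")
    invalid = [r for r in unique if not EMAIL_PATTERN.match(r)]
    if invalid:
        raise ValueError(f"invalid email addresses: {invalid}")
    return unique


def build_tracking_record(owner, s3_key, recipients):
    """Build the item that would be stored to track one uploaded file."""
    return {
        "file_id": str(uuid.uuid4()),
        "owner": owner,
        "s3_key": s3_key,
        "recipients": validate_recipients(recipients),
        "uploaded_at": datetime.now(timezone.utc).isoformat(),
    }
```

Validating before any storage or email call keeps a rejected request from leaving a partial record behind.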

-

Project 7

Dynamic Portfolio Website Deployment with AWS EC2

Objective: Designed and developed a dynamic portfolio website to showcase work, skills, and achievements, utilizing HTML for content structure, CSS for styling, and JavaScript for interactive and dynamic features.

AWS EC2 Hosting: Deployed the website on an Amazon EC2 instance, leveraging cloud-based scalability to efficiently handle growing traffic and demand. Integrated AWS’s robust security measures to safeguard the website and data, with the flexibility to adjust server resources and configurations as needed.

If you like what you see, let's work together.

I build solutions quickly to make people's lives easier. Have any questions? Reach out to me by email and I will get back to you shortly.

Email: [email protected]

GitHub: https://github.com/ynr0007